Blog


credits Landesmuseum, Illuminarium, thingshappen, projektil, colorsound

During the Christmas season, the Illuminarium show takes place at the National Museum in Zurich.

For the occasion, a custom 360-degree projection mapping was created, featuring an interactive multiplayer pinball game.

--

Client: Illuminarium Zurich -- https://illuminarium.ch/

Art direction and production: Ivan Val

Design: Itziar Arriaga https://www.thingshappen.es/work/tracking-7g28c

Programming and hardware: www.colorsound-ixd.com Abraham Manzanares, Paqui Castillo

2D graphics development: Javier Burgos, Ignacio Méndez

3D graphics development: Mario Jimenez, Alberto Vega

Sound design: Sergio Geval

This is my first project, and I hope it will be the first of many.

The project is very simple: a two-day event in which vvvv receives real-time data from bicycles through an API via HTTP GET and displays their power and speed on LED totems. I control everything via OSC. I am really excited about this!
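The post describes two data paths: bike readings polled over HTTP GET, and OSC messages used for control. The sketch below illustrates both outside of vvvv, under stated assumptions. The JSON shape `{"power": ..., "speed": ...}`, the function names, and any OSC addresses are hypothetical, since the post does not document the bike API or the OSC namespace. The OSC encoding itself follows the OSC 1.0 message layout (null-terminated, 4-byte-padded strings, then big-endian float32 arguments).

```python
import json
import socket
import struct
import urllib.request


def osc_pad(data: bytes) -> bytes:
    # OSC strings end with at least one null byte and are padded to a multiple of 4 bytes
    data += b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def encode_osc_message(address: str, *values: float) -> bytes:
    """Build an OSC message whose arguments are all 32-bit floats."""
    type_tags = "," + "f" * len(values)
    message = osc_pad(address.encode("ascii")) + osc_pad(type_tags.encode("ascii"))
    for value in values:
        message += struct.pack(">f", value)  # big-endian float32, as OSC requires
    return message


def send_osc(sock: socket.socket, target: tuple[str, int], address: str, *values: float) -> None:
    # OSC is commonly carried over UDP: one datagram per message
    sock.sendto(encode_osc_message(address, *values), target)


def fetch_reading(url: str) -> str:
    # Plain HTTP GET; the real bike API URL and response format are not given in the post
    with urllib.request.urlopen(url, timeout=2) as response:
        return response.read().decode("utf-8")


def parse_bike_reading(body: str) -> tuple[float, float]:
    # Hypothetical JSON shape: {"power": <watts>, "speed": <km/h>}
    data = json.loads(body)
    return float(data["power"]), float(data["speed"])
```

In use, a loop would call `fetch_reading` on the API roughly once per second and feed `parse_bike_reading` into the display logic, while a control surface sends something like `send_osc(sock, ("127.0.0.1", 9000), "/totem/brightness", 0.8)` over a `socket.socket(socket.AF_INET, socket.SOCK_DGRAM)`. The port and address here are placeholders.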

credits

Concept, production and video: VOLNA

Technical support: 2A Production

Lighting operator: Mark Zaicev

Photo and camera: Polina Korotaeva, VOLNA, Denis Denisov

Special thanks: Denis Filippov, James Ginzburg

Commissioned by Roots United

© VOLNA (2021)

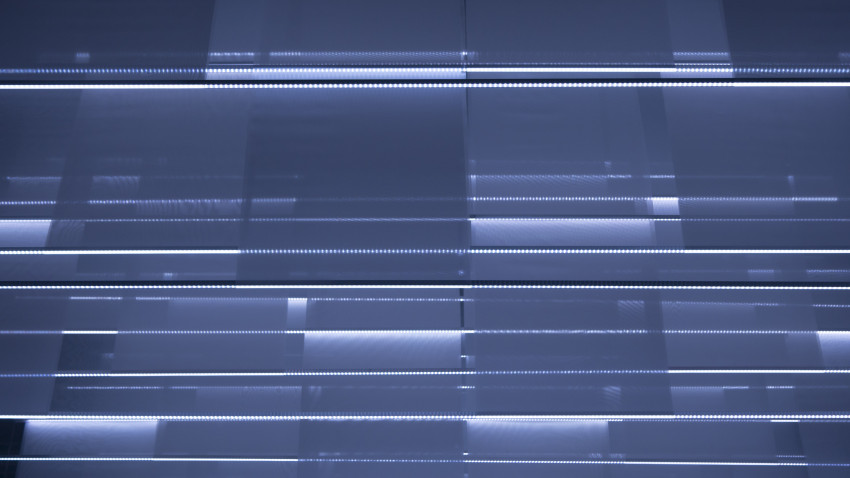

Nebula fills the space above the dance floor and bar of the K-30 club in St. Petersburg. Its look is inspired by the many interstellar nebulae that emit and scatter light.

Dynamic light spills from above the dance floor through a translucent material, creating the effect of a floating astronomical cloud that shimmers across the entire visible spectrum. The installation consists of aluminum LED lines that double as weights, pulling down on a translucent polyamide fabric. It measures 5×3×11 m.

https://volna-media.com/projects/nebula

https://www.instagram.com/keine_angst/

credits

Concept and production: VOLNA

Technical support: 2A Production

Photo and video: VOLNA

Commissioned by Roots United

© VOLNA (2021)

At the heart of this installation is the image of reeds growing by the water and the unique sounds they make when the wind blows. We designed thin technological “stems” and kinetic “inflorescences” in the characteristic shape of reeds. They are moved by the force of the wind, creating flickering light that reacts to air currents. The installation is located right beside the water on the K-30 club promenade and lets viewers experience a futuristic reed-filled wilderness with a view of the Neva River.

https://volna-media.com/projects/reeds

https://www.instagram.com/keine_angst/

credits Sune Petersen aka MOTORSAW (@sunep), Thomas Li

This year a new collaboration between Sune Petersen and Thomas Li has seen the light of day.

We call ourselves LI //// MOTORSAW.

Thomas Li performs live on a modular synth setup with various additions.

MOTORSAW performs live with a generative audiovisual setup: real-time, texture-feedback-based visuals that are sonified.

In the past few weeks we played our first concerts. Here is the recording of the latest one.

Follow https://li.motorsaw.dk/

The gyrovagues were wandering, marginal and undisciplined monks, outsiders to the community with no fixed residence; they lived off the charity and hospitality of others.

Lorenzo de Medici was a poet, patron, statesman, philosopher and seducer. He sought aesthetic enjoyment and contemplation rather than action.

It is unlikely that they ever coincided, either in time or in space.

credits Juan Hurlé, DJ Stingray 313

Real-time generative graphics by Juan Hurlé, made with love in VVVV using VL.Audio and HLSL.

Music: DJ Stingray 313

Label: Micron Audio

Lettering: Leiras Ownlife

Stingray 3D model based on work by Nicholas DaRocha

Thanks to Zoey Lee, Everyoneishappy, Inigo Quilez, evvvvil, Frankenburger, Shane, the VVVV Team, and the whole Shadertoy community for sharing their invaluable knowledge and expertise; without it, this project could never have been completed.

And of course, my gratitude goes to the man / DJ / superhero: Sherard Ingram, aka DJ Stingray 313.

credits (see below)

Empty shop windows are boring. Especially in prime locations, they are also unused display space that could offer local businesses a valuable temporary stage.

The heart of the installation is a voluminous arrangement of controllable LED lights. Viewers can design individual messages using their smartphones and send them directly to the system. A jury regularly selects young companies and startups to be featured in the shop windows.

As soon as a passer-by stands on a marked spot, the complex arrangement of lamps resolves for the viewer and an interactive ticker becomes legible. By scanning a QR code with their smartphone, passers-by can send their own messages and trigger wild light effects in the shop window.
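The post does not describe how the ticker is driven, so the following is only a hypothetical sketch of the general scrolling-ticker technique, not the Responsive Spaces implementation: a message is padded with blanks and a fixed-width window slides across it, producing one frame per step. The function name `ticker_frames` and the idea of one character per lamp cell are assumptions for illustration.

```python
def ticker_frames(message: str, width: int) -> list[str]:
    """Return each visible window of a message scrolling right-to-left across `width` cells."""
    if width <= 0:
        raise ValueError("width must be positive")
    # Pad with blanks so the text enters from the right edge and fully exits on the left
    track = " " * width + message + " " * width
    # One frame per one-cell shift, from an empty display to an empty display
    return [track[i:i + width] for i in range(len(message) + width + 1)]
```

For example, `ticker_frames("HI", 3)` yields `["   ", "  H", " HI", "HI ", "I  ", "   "]`. Starting and ending on a blank frame keeps consecutive submitted messages visually separated.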

WE. LOVE. LINZ.

Client: City of Linz, Creative Region

Location: Linz

Year: 2021

Concept: Responsive Spaces, Creative Region, Michael Holzer

Design: Responsive Spaces, Michael Holzer

Realization: Martin Zeplichal, Nazila Shamsizadeh, Andrea Maderthaner, Michael Holzer

Photos & Video: Nazila Shamsizadeh, Eyup Kuş

credits netflix, pixelandpixel, colorsound
