
Track your Head like Johnny Lee

demo

Credits: Johnny Lee, patched by peter power

about

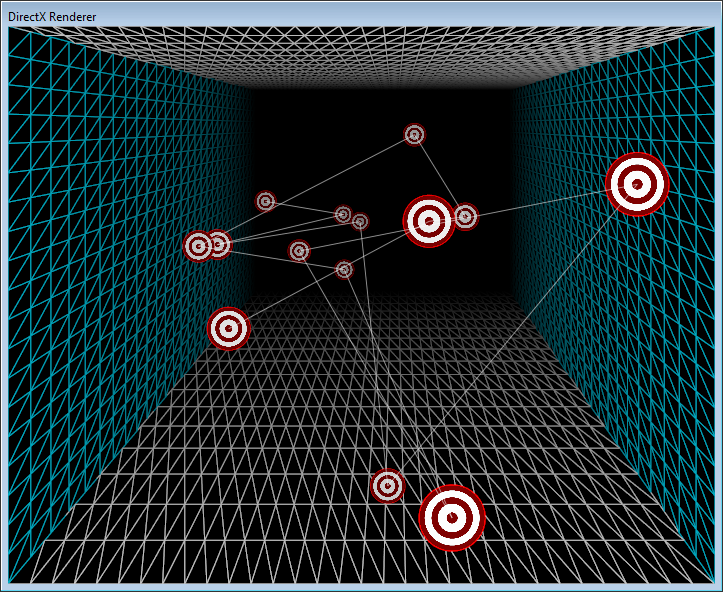

A copycat patch of Johnny Lee's head-tracking VR demo.

http://www.youtube.com/watch?v=Jd3-eiid-Uw

Move your mouse to simulate head tracking. This patch could easily be extended with Kinect head tracking or the new facetracker.

I tried to help a student today and patched this to get an understanding of it myself. PerspectiveLookAtRect does all the work.
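For anyone who wants to see the idea outside of a patch: the effect is usually described as an off-axis (asymmetric) perspective projection, where the screen rectangle is treated as a fixed window and the frustum is recomputed from the viewer's position. The sketch below is not the internals of PerspectiveLookAtRect, just a minimal Python illustration under assumed conventions. The function name, the coordinate setup (screen centered at the origin in the z = 0 plane, viewer at z > 0) and the example numbers are all hypothetical.

```python
def off_axis_frustum(eye, screen_width, screen_height, near):
    """Return (left, right, bottom, top) frustum bounds at the near plane.

    Assumed setup (not taken from the patch): the screen is a rectangle
    centered at the origin in the z = 0 plane, and `eye` is (x, y, z) in
    the same units, with z > 0 being the distance in front of the screen.
    """
    ex, ey, ez = eye
    if ez <= 0:
        raise ValueError("eye must be in front of the screen (z > 0)")
    # Similar triangles: scale the screen edges, seen from the eye,
    # down from the screen plane to the near plane.
    scale = near / ez
    left = (-screen_width / 2 - ex) * scale
    right = (screen_width / 2 - ex) * scale
    bottom = (-screen_height / 2 - ey) * scale
    top = (screen_height / 2 - ey) * scale
    return left, right, bottom, top


# Viewer centered in front of a 1.0 x 0.5 screen: symmetric frustum.
centered = off_axis_frustum((0.0, 0.0, 1.0), 1.0, 0.5, 0.1)
# Viewer moved 0.2 to the right: the frustum becomes lopsided.
shifted = off_axis_frustum((0.2, 0.0, 1.0), 1.0, 0.5, 0.1)
```

In a typical renderer these four values would feed a glFrustum-style projection matrix, combined with a view transform that moves the camera to the eye position.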

I hope this might be of help for someone too.

download


Thanks... indeed useful.

Johnny Lee docet! :)

Check out http://vimeo.com/33088117 - the vid is 2D, but I use two renderers/PerspectiveLookAtRect offset by eye spacing to generate the stereo views and project it in full 3D. With the 9' wide rear projection, it is truly uncanny.
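As a rough illustration of the stereo setup described above, the two viewpoints can be derived from one tracked head position by shifting it half the eye spacing to each side. This is only a sketch: the function name and the default spacing of 0.065 m are assumptions, and it presumes the head is level and the x axis runs parallel to the screen.

```python
def stereo_eyes(head, eye_spacing=0.065):
    """Split one head position into (left_eye, right_eye) positions.

    Assumptions: `head` is (x, y, z) in meters, x runs parallel to the
    screen, and the head is not tilted. The 0.065 m default is only a
    typical placeholder value; measure the real spacing if it matters.
    """
    hx, hy, hz = head
    half = eye_spacing / 2
    return (hx - half, hy, hz), (hx + half, hy, hz)


left_eye, right_eye = stereo_eyes((0.0, 0.0, 1.0), eye_spacing=0.06)
```

Each eye position would then drive its own renderer and its own projection onto the same screen rectangle.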

@mediadog: that is what I was talking about... can you please contribute the Kinect bit? I need to do head tracking for university VR/architectural research, desperado!

@metrowave: If you are using the skeleton tracking facility, then this is pretty easy. I use the Kinect/Xtion up in a corner of the screen though, so I process the point cloud to do the tracking. I did a very clunky but simple head tracker using my own seriously hacked version of the original dynamic Kinect plugin.

In the plugin I subsampled the point cloud data (by about 8 as I recall), and then looked for the highest group of points a certain distance away from the screen (so hands held up touching the screen wouldn't be followed). I also did a test tracking those points near the screen, assumed they were a hand, created a vector from that and the head, and then used Button(3D Mesh) to know what object(s) in the 3D VR space appeared to be behind their hand.
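The head-finding step described above could look roughly like this in Python. It is a simplified sketch, not the plugin code: the function name, the parameter defaults, the coordinate convention (y up, z as distance from the screen) and the "average the N highest points" rule for picking the highest group are all assumptions.

```python
def estimate_head(points, step=8, min_screen_distance=0.5, top_count=20):
    """Estimate a head position from a point cloud.

    Assumptions: each point is (x, y, z) with y pointing up and z being
    the distance from the screen, all in meters. Returns None when no
    point is far enough from the screen.
    """
    # Subsample to keep the cost down (every `step`-th point).
    sampled = points[::step]
    # Ignore anything close to the screen, e.g. hands touching it.
    candidates = [p for p in sampled if p[2] >= min_screen_distance]
    if not candidates:
        return None
    # Treat the highest remaining points as the head and average them.
    highest = sorted(candidates, key=lambda p: p[1], reverse=True)[:top_count]
    n = len(highest)
    return (
        sum(p[0] for p in highest) / n,
        sum(p[1] for p in highest) / n,
        sum(p[2] for p in highest) / n,
    )


cloud = [
    (0.0, 2.0, 0.1),  # raised hand near the screen, should be ignored
    (0.1, 1.7, 1.0),  # head
    (0.3, 1.7, 1.0),  # head
    (0.2, 1.0, 1.0),  # torso
]
head = estimate_head(cloud, step=1, min_screen_distance=0.5, top_count=2)
```

A real tracker would likely also need smoothing over time, since the raw estimate tends to jitter from frame to frame.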

Not code I'm proud of, it has numerous problems and was just a proof of concept to see what VR with rear-projection 3D was like and how a full VR interaction space would work (not like Minority Report, I can assure you!), so I haven't posted it. But I am back to experimenting on something related, so I should be cleaning it up soon and making it more robust. I'm happy to answer any questions though with whatever limited knowledge I've gained so far.

Here's a quick version of u7angel's patch with Kinect head tracking. Just define your monitor/screen size, and set the offset from the center of the screen to where the Kinect is, and this should work. In the patch it is set for a screen 1 meter wide, with the Kinect sitting right underneath it.
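To illustrate what the offset does: the tracked head position arrives in the sensor's own coordinates and has to be moved into coordinates centered on the screen. A minimal sketch, assuming the Kinect is neither tilted nor rotated relative to the screen and that both use the same axes and units; the function name and the 16:9 screen height in the example are hypothetical.

```python
def kinect_to_screen(head_in_kinect, kinect_offset):
    """Move a head position from Kinect space into screen-centered space.

    `kinect_offset` is the Kinect's position relative to the screen
    center. Assumption: translation only, no tilt or rotation.
    """
    return tuple(h + o for h, o in zip(head_in_kinect, kinect_offset))


# Screen 1 m wide; a 16:9 height of 0.5625 m is assumed for this example.
screen_height = 0.5625
# Kinect sitting right underneath the screen, centered horizontally.
offset = (0.0, -screen_height / 2, 0.0)
head_on_screen = kinect_to_screen((0.1, 0.3, 1.5), offset)
```

If the sensor is tilted up or down, a rotation would be needed before the translation.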

YAAARGH! I can only attach a picture. Guess I'll have to make a new submission....

Thanks a lot mediadog: I'm very grateful that you contributed this. Also to u7angel: for starting the patch. I really needed head tracking for a research project but didn't have a good idea how to approach it. This helped me a lot. Best wishes to both...

@mediadog I'm trying to build a head tracker using Kinect, but the object always drifts or doesn't stay in position. Would you share how you do head tracking with Kinect?

Hi, I am trying to replicate this effect in gamma+Stride, but I noticed that when I add Z movement (getting closer to or further away from the screen) things don't look quite right.

In this patch, if I move the Translate Camera's z value to move "into" the room, the objects in the back move away from me when they should move closer; when I move away from the room, the objects in the back come closer to me.

Any idea why this is happening and how I might be able to correct it?

Thanks.

@ravazquez Is that how it looks FROM the moving viewpoint? If you are standing back looking at the image, then as the viewpoint gets closer to the screen it sees a wider angle "through" the rectangle, and it will look like objects are getting smaller. Conversely, when the viewpoint gets farther away, it looks through a narrower angle and objects still in view will get larger on the screen. But when you are actually at the viewpoint, it should look correct, because you are getting closer to the screen as objects get smaller to compensate, etc.
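The geometry behind this can be checked with a few lines of Python. This is a sketch under simple assumptions: the viewer is centered in front of the screen, and the object sits straight behind the screen plane. The function names and example numbers are made up for illustration.

```python
import math


def view_angle_deg(screen_width, viewer_distance):
    """Horizontal angle (degrees) seen "through" the screen rectangle."""
    return math.degrees(2 * math.atan(screen_width / (2 * viewer_distance)))


def size_on_screen(object_size, depth_behind_screen, viewer_distance):
    """Size an object is drawn at on the screen plane (similar triangles)."""
    return object_size * viewer_distance / (viewer_distance + depth_behind_screen)


# 1 m wide screen: the angle widens as the viewer moves closer.
near_angle = view_angle_deg(1.0, 0.5)
far_angle = view_angle_deg(1.0, 2.0)

# A 1 m object 1 m behind the screen is drawn smaller for a close viewer
# and larger for a distant one.
drawn_close = size_on_screen(1.0, 1.0, 1.0)
drawn_far = size_on_screen(1.0, 1.0, 3.0)
```

So the image on the screen shrinks as the viewpoint approaches, which only looks right from the tracked viewpoint itself.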

Oh and just for reference here's the old Kinect version:

track-your-head-wkinect

Thanks @mediadog. It turns out that after dialing in all the measurements it does feel correct; it was a matter of precision in the end.

Cheers!